Data Privacy Impact Assessment: Defend, Prevent, and Protect

Purpose

This document is intended to give you, as a privacy professional, a baseline for your impact assessment. Please factor this information into your own assessment, and add all the components that a data controller/customer should include.

Hosting Locations

Customers can choose their hosting location prior to implementation. Details are available on our Data Storage Locations page.

Defend

Defend uses a combination of rule-based and automated monitoring to risk-score emails and the links within them. By assessing email traffic, it can advise users on the likelihood that an email is a genuine phishing attempt.

Types of processing involved:

Warnings will appear if:

- The email originated from an external domain

- It comes from a first-time sender

- It fails authenticity checks

- It matches a classifier

- Links within the email match confirmed phishing domains/URLs

The administrator portal shows whether a user clicked on a link. This is used to assess the extent of exposure to verified attacks.

Types of personal data collected:

- Email metadata that could reveal personal data by inference:

  - Sender address

  - Subject

  - Attachment names

  - Sender IP address (with geolocation where possible)

  - Other recipients of the email

  - Date/time the email was sent

For emails confirmed as malicious, selected portions of the content are fingerprinted and then hashed.

Personal and confidential information is removed before hashing and storage. For example:

"Hi James you have won £3000!!!" --> "Hi User you have won 0000!"

The hash of the redacted text "Hi User you have won 0000!" is then stored.
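The redact-then-hash step can be illustrated with a minimal Python sketch. The redaction rules below (replacing known names with "User", dropping currency symbols, masking digits with 0 and collapsing repeated punctuation) are assumptions chosen only to reproduce the example above, and `normalise` and `fingerprint` are hypothetical names. Defend's actual fingerprinting rules and hash algorithm are not described in this document; SHA-256 is an illustrative choice.

```python
import hashlib
import re


def normalise(text: str, known_names: set[str]) -> str:
    """Redact personal and confidential details before hashing (hypothetical rules)."""
    # Replace each known personal name with the placeholder "User".
    for name in known_names:
        text = re.sub(rf"\b{re.escape(name)}\b", "User", text)
    # Drop currency symbols and mask every digit with 0.
    text = re.sub(r"[£$€]", "", text)
    text = re.sub(r"\d", "0", text)
    # Collapse runs of repeated punctuation such as "!!!" into a single mark.
    text = re.sub(r"([!?.])\1+", r"\1", text)
    return text


def fingerprint(text: str, known_names: set[str]) -> str:
    """Return the SHA-256 hex digest of the redacted text; only this digest would be stored."""
    return hashlib.sha256(normalise(text, known_names).encode("utf-8")).hexdigest()


print(normalise("Hi James you have won £3000!!!", {"James"}))  # Hi User you have won 0000!
```

Because redaction happens before hashing, two copies of the same lure addressed to different people (for example "Hi Anna ..." and "Hi James ...") produce the same digest, so the stored hash can match future copies of a campaign without retaining the recipient's name.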

Data sources

Email metadata (email address, which may contain forename and surname) of a data subject who is not a customer may be collected where they are the recipient of a customer user’s email, or where they send an email to a customer of KnowBe4. This is necessary to identify the likelihood of an email being malicious or a phishing attempt.

Data storage

The personal data listed above are stored in Defend. Email content is not stored unless it is identified as malicious, either by users or by the system (shown as a red banner labelled Dangerous). Data that could be considered personal is anonymized using the redaction and hashing process described above.

Data retention

Emails are held in a cache until they are forwarded to the recipient’s mailbox, then permanently deleted from the system. This typically takes milliseconds.

Risk classification results of emails are stored encrypted at rest for up to 18 months, then permanently deleted from the system.
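A rolling 18-month retention rule of this kind can be sketched as follows. This is an illustrative Python sketch, not KnowBe4's implementation: the record structure (an ID mapped to its storage date), the `purge` function, and the choice to treat the 18-month anniversary (clamped to the end of the month) as the expiry date are all assumptions.

```python
import calendar
from datetime import date

RETENTION_MONTHS = 18  # retention period stated for risk classification results


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def is_expired(stored_on: date, today: date) -> bool:
    """True once a record has reached the end of its retention period."""
    return today >= add_months(stored_on, RETENTION_MONTHS)


def purge(records: dict[str, date], today: date) -> dict[str, date]:
    """Keep only records still within retention; the rest would be permanently deleted."""
    return {rid: stored for rid, stored in records.items() if not is_expired(stored, today)}


kept = purge({"a": date(2023, 1, 10), "b": date(2024, 6, 1)}, today=date(2024, 7, 10))
print(kept)  # {'b': datetime.date(2024, 6, 1)}
```

Running the purge on a schedule (for example daily) gives the "rolling" behaviour: each record expires individually, 18 months after it was stored.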

General system information that customer administrators can view in the admin portal is available for the duration of the contract.

Data use

Email contents and metadata are used to build up a risk score. The score increases as verified factors of an email indicate a stronger likelihood of a phishing attempt.
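The idea of a score that rises as more warning factors are verified can be sketched with a simple weighted sum. Everything specific here is hypothetical: the signal names mirror the warning conditions listed earlier in this section, but the weights, the additive model and the threshold for the red "Dangerous" banner are assumptions, not Defend's actual scoring.

```python
from typing import Optional

# Hypothetical weights for the warning factors listed earlier in this section;
# Defend's real factors, weights and thresholds are not documented here.
WEIGHTS = {
    "external_domain": 1,
    "first_time_sender": 2,
    "failed_authenticity_check": 3,
    "classifier_match": 3,
    "known_phishing_link": 5,
}
DANGEROUS_THRESHOLD = 5  # hypothetical score at which the red "Dangerous" banner is shown


def risk_score(signals: set[str]) -> int:
    """Sum the weights of the verified signals; unrecognised signals are ignored."""
    return sum(WEIGHTS[s] for s in signals if s in WEIGHTS)


def banner(signals: set[str]) -> Optional[str]:
    """Return "Dangerous" when the score reaches the threshold, otherwise None."""
    return "Dangerous" if risk_score(signals) >= DANGEROUS_THRESHOLD else None


print(risk_score({"external_domain", "first_time_sender"}))  # 3
print(banner({"known_phishing_link"}))  # Dangerous
```

An additive model keeps each factor's contribution explainable, which fits the web page users can open to see why an email received its score.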

Data access

Users can click the warning icon added to the header of each received email to open a web page explaining why that email has a higher or lower risk score.

Administrator access is disabled by default and must be approved by the global admin. Access to the admin portal is managed by role-based access control (RBAC).

Security measures

KnowBe4 takes several measures to ensure the security of the supporting infrastructure. These can be found in our DPA and on our website.

The context of the processing and nature of the relationship with organisations and data subjects

KnowBe4 has a contractual relationship with its customer organisations to provide them with Defend.

Extent to which data subjects have control over their data

KnowBe4 respects data subjects’ rights under applicable data protection legislation and explains on its website how they can exercise those rights. The impact (if any) of Defend on these rights is considered below.

KnowBe4 is reliant on customer organisations to communicate with their users to explain the processing conducted by Defend.

Users can override a risk score by clicking the threat icon and confirming whether the identified email is legitimate.

Artificial Intelligence

Necessity and proportionality

Guidance from the UK Information Commissioner’s Office (ICO) on the use of AI states that deploying an AI system to process personal data needs to be driven by evidence that there is a problem, and a reasoned argument that AI is a sensible solution to that problem (not merely the availability of the technology).

The identified problem: there is a strong body of evidence that the human layer of an organisation poses a threat to security that is often overlooked. One aspect of this is the risk that it is very easy for key confidential information to accidentally or maliciously leave an organisation through the acts of clicking or responding to malicious emails.

The solution: whilst simple solutions exist, such as revoking email access or manually reviewing each email in quarantine, neither is desirable.

The use of AI: AI offers a more practical alternative. It is used to deliver intelligent advice based on an understanding of the individual user’s email behaviour, history and interactions, and their likelihood of being targeted by malicious actors. This type of analysis requires machine learning to determine whether an email is likely to be malicious.

Why AI is proportionate here: although the AI is in some ways not obvious to the end user, it is proportionate to the aim (preventing security incidents). We have carefully considered not only the limits of the information required to generate these machine-led prompts, but also the privacy issues raised by ancillary aspects of the tool, such as email visibility. Further, the outcomes of the processing are not high risk to the individual: they are banners, prompts or email notifications asking the individual to check whether an email is authentic or malicious.

The risk to the individual arises from the admin reporting functionality: a customer admin user could infer additional information from the reports, or could bring internal disciplinary action if advice is ignored and an incident results. The latter risk may be no greater than would arise in any event from an internal investigation following a data breach.

The AI may infer data about people (i.e. their predicted email behaviour), but not of a kind that will intentionally discriminate against them or affect their reasonable expectations; users can choose to ignore the guidance prompt. Doing so increases the risk of engaging with a malicious email.

Accuracy

Defend, like any risk evaluation system, cannot be 100% accurate. In keeping with guidance from the UK Information Commissioner’s Office, Defend provides a statistically informed estimate of the potential risk posed by a given email, based on factors such as a suspicious or known sender, malicious links or manipulative language.

Users’ responses to the banners also feed into future processing, improving accuracy through learnt behaviour.

Article 22 of the GDPR

Article 22 of the GDPR has additional rules designed to protect individuals if solely automated decision-making is carried out that has a legal or similarly significant effect on them.

These protections are not applicable to Defend. Defend requires meaningful human interaction for any decisions. The result of the automated processing is a visual/email prompt alerting the individual user themselves to a potential risk in the email and leaving them in control of any future action that they take.

Prevent

Prevent uses AI to help users avoid accidental mistakes when sharing content by email. By combining historic email behaviour, trends and domain hygiene analysis, it can advise users of the perceived risk level of a proposed email.

Prevent is designed to help KnowBe4 and its customers prevent data and confidentiality breaches.

Types of processing involved:

Prevent captures a user’s email usage and checks it for the following warnings and triggers (no personal data is captured during this exercise):

- Default processing

- The email involves a blocked domain

- The email is associated with a suspicious mail server

- The email involves a recently registered domain

- User prompt suggestions based on the email’s attributes
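The domain-hygiene checks above can be illustrated with a small Python sketch. It is hypothetical throughout: the function name, the 30-day cut-off for a "recently registered" domain, and the assumption that a registration date is supplied by some external lookup (such as WHOIS or RDAP) are illustrative choices, not a description of how Prevent performs these checks.

```python
from datetime import date, timedelta
from typing import Optional

RECENT_DOMAIN_AGE = timedelta(days=30)  # hypothetical cut-off for a "recently registered" domain


def domain_warnings(
    domain: str,
    mail_server: str,
    blocked_domains: set[str],
    suspicious_mail_servers: set[str],
    registered_on: Optional[date],
    today: date,
) -> list[str]:
    """Return the warning triggers raised for one recipient domain (hypothetical checks)."""
    warnings = []
    if domain.lower() in blocked_domains:
        warnings.append("blocked_domain")
    if mail_server.lower() in suspicious_mail_servers:
        warnings.append("suspicious_mail_server")
    # The registration date is assumed to come from an external lookup; None means unknown.
    if registered_on is not None and today - registered_on < RECENT_DOMAIN_AGE:
        warnings.append("recently_registered_domain")
    return warnings


print(domain_warnings("new-shop.test", "mx.new-shop.test", set(), set(),
                      date(2024, 5, 20), date(2024, 6, 1)))  # ['recently_registered_domain']
```

These checks operate on domains and mail servers rather than on message content, which is consistent with the statement that no personal data is captured during this step.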

Types of personal data collected:

- Portions and metadata of emails, as determined by the sender

- Hashed portions of email body

- Hashed portions of email attachments

- Attachment names

- Decisions on whether an email is sent or advice is ignored

Data sources

Email metadata (email address, which may contain forename and surname) of a data subject may be collected where they are the recipient of a customer email.

Data storage

Data is stored in Microsoft Azure at the locations listed in the “Hosting Locations” section of this DPIA.

Data retention

KnowBe4 keeps all the data collected from an organisation for as long as that organisation remains a customer (or until the end of a trial period). These retention periods are explained on our website. Ingested email data is stored for up to 18 months on a rolling basis. When an organisation stops being a customer, we delete all associated Prevent behaviour data after the termination or expiry of its contract with us.

Data use

Email metadata is used to advise users of Prevent, helping them avoid accidentally sending sensitive emails and content to the wrong recipient by highlighting actions that may be out of step with their normal sending behaviour. Performance data from the Prevent tool is used to generate aggregate statistics in a dashboard, designed to demonstrate the value of the product to customer administrative users.

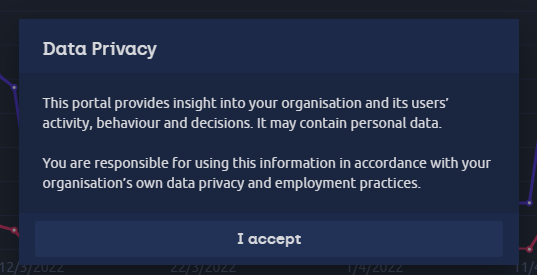

Data access

Users of Prevent have access to the results of the data processing the software performs through the advice given to them. They have no access to the data itself.

Notification

Security measures

KnowBe4 takes several measures to ensure the security of the supporting infrastructure. These can be found in our DPA and on our website.

Data Collected

In addition to the types of personal data listed above, Prevent collects:

- Type of advice provided

Nature of the relationship with organisations and data subjects

There is a contractual relationship between a customer and KnowBe4 to provide the Prevent tool. KnowBe4 may not have a relationship with the third parties with whom users communicate. Whilst Prevent collects their email addresses as part of the product, these are not (and will not be) used to communicate with them.

Extent to which data subjects have control over their data

KnowBe4 relies on customer organisations to explain the processing conducted by Prevent to their users, and to inform third parties of the fact and nature of the processing of their email data. Third-party recipients may request to exercise their statutory rights under relevant privacy laws.

Extent to which data subjects are likely to expect the processing

Individual users of Prevent should expect the processing, as they should have been notified of the roll-out of the technology by their employing organisation. Third parties with whom Prevent users communicate may expect that their communications with individuals at organisations are likely to be monitored and scanned, for example for malware or to check whether they are encrypted. However, they may not be aware of the purposes of the processing.

Artificial Intelligence

Necessity and proportionality

The UK Information Commissioner’s Office guidance on the use of AI shows that the deployment of an AI system to process personal data needs to be driven by evidence that there is a problem, and a reasoned argument that AI is a sensible solution to that problem (and not the mere availability of the technology).

The identified problem: there is a strong body of evidence that the human layer of an organisation poses a threat to security that is often overlooked. One aspect of this is the risk that it is very easy for key confidential information to accidentally or maliciously leave an organisation through the acts of an innocent or bad actor. The accidental sending of emails to wrong recipients, or with incorrect information, is an accepted part of this threat.

The solution: whilst simple solutions exist, such as requiring individuals to check their actions or blocking sending to force them to do so, neither is desirable.

The use of AI: AI is used to deliver intelligent advice based on an understanding of the individual user’s email behaviour, history and interactions. This type of analysis requires machine learning to learn how user A’s behaviour differs from user B’s, and why action C could potentially be a mistake.

Why AI is proportionate here: although the AI is in some ways not obvious to the end user, it is proportionate to the aim (preventing accidental sends). We have carefully considered not only the limits of the information required to generate these machine-led prompts, but also the privacy issues raised by ancillary aspects of the Prevent tool (such as the admin reporting functionality). Further, the outcomes of the processing are not high risk to the individual: they are pop-up prompts or email notifications asking the individual to confirm that they actually want to send the information, and explaining why the algorithm believes there may be an error that needs checking.

The risk to the individual arises from the admin reporting functionality: a customer admin user could infer additional information from the reports, or could bring internal disciplinary action if advice is ignored and an incident results. The latter risk may be no greater than would arise in any event from an internal investigation following a data breach.

The AI may infer data about people (i.e. their predicted email behaviour), but not of a kind that will intentionally discriminate against them or affect their reasonable expectations; users can choose to ignore the guidance prompt. Doing so increases the risk of a misdirected email.

Accuracy

Prevent is not designed to be 100% accurate. In keeping with guidance from the UK Information Commissioner’s Office, Prevent is intended to provide a statistically informed estimate of whether the user’s intended email activity may contain an error.

The user’s response to the prompts also helps to guide future processing to improve the accuracy through the ‘learnt behaviour’.

Article 22 of the GDPR

Article 22 of the GDPR has additional rules designed to protect individuals if solely automated decision-making is carried out that has a legal or similarly significant effect on them.

These protections are not applicable to the Prevent tool. Prevent requires meaningful human interaction for any decisions and is not designed to have a legal or similarly significant effect as a result of its processing. The result of the automated processing is a visual/email prompt alerting the individual user to a potential error in the email they are proposing to send, leaving them in control of any further action they take.

Protect

Purpose

Protect provides an encryption layer on top of email communication, giving email senders additional control over shared data once it has left their network.

Types of processing involved

Protect is a communication tool used by senders and recipients of email. Processing involves emails and their contents, which are determined by the sender/author of each email.

The product can be used to exchange any kind of information; it does not analyse transmitted content other than for antivirus scanning.

Types of personal data collected:

- During account registration, the following data is requested from users:

  - Email address

  - First and last name

  - Password

  - Security questions and answers, used for automatic password reset if the password is forgotten (not required with OpenID)

  - Mobile telephone number (optional), collected for the same purpose

- OpenID can be set up for users of free Google and Microsoft email services.

- In addition, when registered users communicate with Protect servers, the product records the IP addresses of the received requests, including key management requests.

- Depending on configuration, recipients may be able to download messages from Web Access in unencrypted form.

Data Sources

Data and content are provided to us by users during account registration and when they use the product to exchange content with others. This includes a user’s interactions with the software (via the web interface, desktop and mobile applications) and indirect actions, when email messages are processed by Gateways installed in the mail path by business customers’ organisations.

Each customer organisation and user may use one or more of these data sources.

Information about a data subject who is not our customer may be collected where they are the recipient of a customer’s or user’s email, or where they send an email to such a customer or user. To subsequently access that secure email or file, the individual would need to register with us as either a free or paying subscriber, providing the data referred to above.

Data storage

Data is stored in Microsoft Azure and Large File Transfer data is stored in Amazon S3 storage. Storage locations are outlined in the “Hosting Locations” section of this DPIA.

Data retention

Encrypted packages reside in high-availability blob storage with a default retention period of 90 days. After the 90-day period, senders and recipients can resend a package as an attachment to open@reader.egress.com to decrypt and access it.

Encryption keys associated with users are stored for the duration of the account being active.

KnowBe4’s Retention Policy is published on our website.

Data use

Package access patterns are analysed to detect possible security problems.

Data access

Only employees and contractors have access to the data, and that access is limited to what is necessary to maintain system operation, based on their role. Employees and contractors have no access to encrypted message contents unless the sender has explicitly added their email addresses to the recipient list.

Data subjects can view their account data through the products’ web interface, including the information they provided during account registration and audit information associated with the encrypted packages they have sent.

Data subjects are also able to exercise certain rights provided by law – details of these and how they can do so are set out on our website.

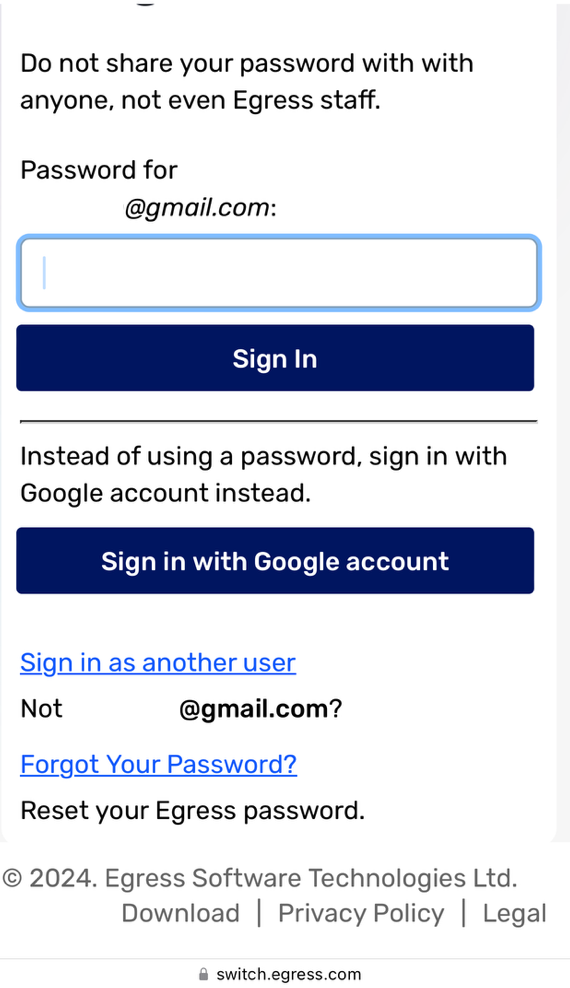

OpenID

After account creation, users of free email services such as Google or Microsoft can sign in with those accounts rather than with a Protect ESI account.

Security measures

See our website.

Data collected

Protect collects the following metadata about email messages that are encrypted:

- message date and time

- sender and recipients email addresses

- message subject

- list of message attachment filenames, and SHA256 hashes of the contents

- AES message key used to encrypt the message content

- time and public IP address of each key registration request, sent to store the key on our servers or to retrieve it from group servers

- client software type, version, preferred user interface language used

- device identifier, used to identify the particular instance of the client software between requests

This information is stored on key management servers and is used to display the list of sent messages to the sender, allowing them to identify individual messages.
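A minimal Python sketch of a metadata record covering some of the fields listed above may help show what is retained and what is not. The `MessageMetadata` class and `build_metadata` function are hypothetical, and the AES key, IP address, client and device fields are omitted for brevity. The sketch stores each attachment's filename with the SHA-256 hash of its contents, but not the contents themselves.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class MessageMetadata:
    """Subset of the metadata fields listed above (hypothetical structure)."""
    sent_at: datetime
    sender: str
    recipients: list[str]
    subject: str
    # (filename, SHA-256 hex digest of the attachment contents)
    attachments: list[tuple[str, str]] = field(default_factory=list)


def build_metadata(sender: str, recipients: list[str], subject: str,
                   attachments: dict[str, bytes]) -> MessageMetadata:
    """Record attachment names and content hashes; the attachment bytes are not kept."""
    hashed = [(name, hashlib.sha256(data).hexdigest()) for name, data in attachments.items()]
    return MessageMetadata(
        sent_at=datetime.now(timezone.utc),
        sender=sender,
        recipients=list(recipients),
        subject=subject,
        attachments=hashed,
    )


meta = build_metadata("alice@example.com", ["bob@example.org"], "Q3 report",
                      {"report.pdf": b"hello"})
print(meta.attachments[0][0])  # report.pdf
```

Storing a content hash rather than the file lets a sender confirm which attachment was shared without the key management servers holding the attachment itself.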

Nature of the relationship with organisations and data subjects

We have a contractual relationship with our customers to provide them with Protect, and can communicate with individual users at those organisations through our software.

KnowBe4 servers act as an intermediary between sender and recipients; the choice of recipients and the content of the communications sent to them are controlled by the sender. The content of a communication may contain any information about data subjects, who may be senders, recipients or third parties.

The customer organisation and user are responsible for ensuring they have a lawful basis for the transmission of the content generally and through use of Protect.

Extent to which data subjects have control over their data

All users can use their account user interface to see and modify attributes associated with their account, such as first name, last name, organization name, telephone number etc.

Senders can also view, and partially modify, the metadata of encrypted packages they have shared with others, for example by adding or removing allowed recipients, changing the message subject or revoking access.

Account information can be deleted from our servers by a customer’s administrator users. Free email users can contact our customer services team.

Recipients of content who wish to exercise their right to have information about them removed from our servers need to contact the controller of that content, i.e. the sender user or customer organisation. This is the same as for regular, non-secure message exchange.

Details of how users can exercise their legal rights in their personal data are also set out on our website.

Extent to which data subjects are likely to expect the processing

Individual users of Protect should expect the processing, as they should have been notified of the roll-out of the technology by their employing organisation.

Third parties with whom users of Protect communicate might expect that their communications with individuals at organisations are likely to be monitored, as this is established practice across many businesses and sectors. However, they may not be aware of the purposes.

Artificial Intelligence

AI is not used with Protect.